Loading data Into Clickhouse

In the previous post I discussed about TPCDS Data generation you can find details here

The dataset I’m working with is broken up into 121 .dat files.

[root@aavin-dev tpcds-data]# ls -l total 225027664 -rw-r--r-- 1 root root 1946 Apr 1 21:42 call_center_1_8.dat -rw-r--r-- 1 root root 1631792 Apr 1 21:42 catalog_page_1_8.dat -rw-r--r-- 1 root root 412557275 Apr 1 22:05 catalog_returns_1_8.dat -rw-r--r-- 1 root root 415381370 Apr 1 22:05 catalog_returns_2_8.dat -rw-r--r-- 1 root root 416588434 Apr 1 22:05 catalog_returns_3_8.dat -rw-r--r-- 1 root root 419113946 Apr 1 22:05 catalog_returns_4_8.dat -rw-r--r-- 1 root root 419618946 Apr 1 22:05 catalog_returns_5_8.dat -rw-r--r-- 1 root root 419668782 Apr 1 22:05 catalog_returns_6_8.dat -rw-r--r-- 1 root root 419095133 Apr 1 22:05 catalog_returns_7_8.dat -rw-r--r-- 1 root root 419423486 Apr 1 22:05 catalog_returns_8_8.dat -rw-r--r-- 1 root root 5663066111 Apr 1 22:05 catalog_sales_1_8.dat -rw-r--r-- 1 root root 5694430964 Apr 1 22:05 catalog_sales_2_8.dat -rw-r--r-- 1 root root 5708207496 Apr 1 22:05 catalog_sales_3_8.dat -rw-r--r-- 1 root root 5725561193 Apr 1 22:05 catalog_sales_4_8.dat -rw-r--r-- 1 root root 5733999632 Apr 1 22:05 catalog_sales_5_8.dat -rw-r--r-- 1 root root 5733022509 Apr 1 22:05 catalog_sales_6_8.dat -rw-r--r-- 1 root root 5734401942 Apr 1 22:05 catalog_sales_7_8.dat -rw-r--r-- 1 root root 5734386947 Apr 1 22:05 catalog_sales_8_8.dat -rw-r--r-- 1 root root 113931783 Apr 1 21:46 customer_1_4.dat -rw-r--r-- 1 root root 113931783 Apr 1 22:05 customer_1_8.dat -rw-r--r-- 1 root root 47182604 Apr 1 21:46 customer_address_1_4.dat -rw-r--r-- 1 root root 47182604 Apr 1 22:06 customer_address_1_8.dat -rw-r--r-- 1 root root 19851495 Apr 1 21:46 customer_demographics_1_4.dat -rw-r--r-- 1 root root 9870125 Apr 1 22:06 customer_demographics_1_8.dat -rw-r--r-- 1 root root 19959800 Apr 1 21:46 customer_demographics_2_4.dat -rw-r--r-- 1 root root 9981370 Apr 1 22:05 customer_demographics_2_8.dat -rw-r--r-- 1 root root 20401801 Apr 1 21:45 customer_demographics_3_4.dat -rw-r--r-- 1 root root 9979830 Apr 1 22:05 customer_demographics_3_8.dat -rw-r--r-- 1 root root 20447000 Apr 1 21:45 customer_demographics_4_4.dat -rw-r--r-- 1 root root 9979970 Apr 1 22:05 customer_demographics_4_8.dat -rw-r--r-- 1 root root 10181031 Apr 1 22:05 customer_demographics_5_8.dat -rw-r--r-- 1 root root 10220770 Apr 1 22:05 customer_demographics_6_8.dat -rw-r--r-- 1 root root 10223430 Apr 1 22:05 customer_demographics_7_8.dat -rw-r--r-- 1 root root 10223570 Apr 1 22:05 customer_demographics_8_8.dat -rw-r--r-- 1 root root 10317438 Apr 1 21:46 date_dim_1_4.dat -rw-r--r-- 1 root root 10317438 Apr 1 22:06 date_dim_1_8.dat -rw-r--r-- 1 root root 83 Apr 1 23:08 dbgen_version_1_4.dat -rw-r--r-- 1 root root 83 Apr 1 22:57 dbgen_version_1_8.dat -rw-r--r-- 1 root root 151653 Apr 1 21:46 household_demographics_1_4.dat -rw-r--r-- 1 root root 151653 Apr 1 22:06 household_demographics_1_8.dat -rw-r--r-- 1 root root 328 Apr 1 21:46 income_band_1_4.dat -rw-r--r-- 1 root root 328 Apr 1 22:06 income_band_1_8.dat -rw-r--r-- 1 root root 106488091 Apr 1 21:47 inventory_1_4.dat -rw-r--r-- 1 root root 53244185 Apr 1 22:06 inventory_1_8.dat -rw-r--r-- 1 root root 106493017 Apr 1 21:46 inventory_2_4.dat -rw-r--r-- 1 root root 53243906 Apr 1 22:05 inventory_2_8.dat -rw-r--r-- 1 root root 106492279 Apr 1 21:46 inventory_3_4.dat -rw-r--r-- 1 root root 53246515 Apr 1 22:05 inventory_3_8.dat -rw-r--r-- 1 root root 106489391 Apr 1 21:46 inventory_4_4.dat -rw-r--r-- 1 root root 53246502 Apr 1 22:05 inventory_4_8.dat -rw-r--r-- 1 root root 53245836 Apr 1 22:05 inventory_5_8.dat -rw-r--r-- 1 root root 53246443 Apr 1 22:05 inventory_6_8.dat -rw-r--r-- 1 root root 53245801 Apr 1 22:05 inventory_7_8.dat -rw-r--r-- 1 root root 53243590 Apr 1 22:05 inventory_8_8.dat -rw-r--r-- 1 root root 9014074 Apr 1 21:47 item_1_4.dat -rw-r--r-- 1 root root 9014074 Apr 1 22:06 item_1_8.dat -rw-r--r-- 1 root root 46526 Apr 1 21:47 promotion_1_4.dat -rw-r--r-- 1 root root 46526 Apr 1 22:06 promotion_1_8.dat -rw-r--r-- 1 root root 1370 Apr 1 21:47 reason_1_4.dat -rw-r--r-- 1 root root 1370 Apr 1 22:06 reason_1_8.dat -rw-r--r-- 1 root root 1113 Apr 1 21:47 ship_mode_1_4.dat -rw-r--r-- 1 root root 1113 Apr 1 22:06 ship_mode_1_8.dat -rw-r--r-- 1 root root 29896 Apr 1 21:47 store_1_4.dat -rw-r--r-- 1 root root 29896 Apr 1 22:06 store_1_8.dat -rw-r--r-- 1 root root 1274693116 Apr 1 22:59 store_returns_1_4.dat -rw-r--r-- 1 root root 636371540 Apr 1 22:44 store_returns_1_8.dat -rw-r--r-- 1 root root 1285696254 Apr 1 22:59 store_returns_2_4.dat -rw-r--r-- 1 root root 638321576 Apr 1 22:43 store_returns_2_8.dat -rw-r--r-- 1 root root 1287978462 Apr 1 22:59 store_returns_3_4.dat -rw-r--r-- 1 root root 642181285 Apr 1 22:44 store_returns_3_8.dat -rw-r--r-- 1 root root 1287065805 Apr 1 22:59 store_returns_4_4.dat -rw-r--r-- 1 root root 643514969 Apr 1 22:43 store_returns_4_8.dat -rw-r--r-- 1 root root 643768298 Apr 1 22:43 store_returns_5_8.dat -rw-r--r-- 1 root root 644210164 Apr 1 22:43 store_returns_6_8.dat -rw-r--r-- 1 root root 643747833 Apr 1 22:43 store_returns_7_8.dat -rw-r--r-- 1 root root 643317972 Apr 1 22:44 store_returns_8_8.dat -rw-r--r-- 1 root root 15038020977 Apr 1 22:59 store_sales_1_4.dat -rw-r--r-- 1 root root 7511562680 Apr 1 22:44 store_sales_1_8.dat -rw-r--r-- 1 root root 15144944067 Apr 1 22:59 store_sales_2_4.dat -rw-r--r-- 1 root root 7526458297 Apr 1 22:43 store_sales_2_8.dat -rw-r--r-- 1 root root 15159344776 Apr 1 22:59 store_sales_3_4.dat -rw-r--r-- 1 root root 7567513662 Apr 1 22:44 store_sales_3_8.dat -rw-r--r-- 1 root root 15158612490 Apr 1 22:59 store_sales_4_4.dat -rw-r--r-- 1 root root 7577430405 Apr 1 22:43 store_sales_4_8.dat -rw-r--r-- 1 root root 7579343490 Apr 1 22:43 store_sales_5_8.dat -rw-r--r-- 1 root root 7580001286 Apr 1 22:43 store_sales_6_8.dat -rw-r--r-- 1 root root 7580943904 Apr 1 22:43 store_sales_7_8.dat -rw-r--r-- 1 root root 7577668586 Apr 1 22:44 store_sales_8_8.dat -rw-r--r-- 1 root root 5107780 Apr 1 22:59 time_dim_1_4.dat -rw-r--r-- 1 root root 5107780 Apr 1 22:44 time_dim_1_8.dat -rw-r--r-- 1 root root 585 Apr 1 22:59 warehouse_1_4.dat -rw-r--r-- 1 root root 585 Apr 1 22:44 warehouse_1_8.dat -rw-r--r-- 1 root root 19333 Apr 1 22:59 web_page_1_4.dat -rw-r--r-- 1 root root 19333 Apr 1 22:44 web_page_1_8.dat -rw-r--r-- 1 root root 385713161 Apr 1 23:08 web_returns_1_4.dat -rw-r--r-- 1 root root 192075934 Apr 1 22:57 web_returns_1_8.dat -rw-r--r-- 1 root root 386753441 Apr 1 23:08 web_returns_2_4.dat -rw-r--r-- 1 root root 193637227 Apr 1 22:57 web_returns_2_8.dat -rw-r--r-- 1 root root 386715245 Apr 1 23:08 web_returns_3_4.dat -rw-r--r-- 1 root root 193369864 Apr 1 22:57 web_returns_3_8.dat -rw-r--r-- 1 root root 387315801 Apr 1 23:08 web_returns_4_4.dat -rw-r--r-- 1 root root 193383577 Apr 1 22:57 web_returns_4_8.dat -rw-r--r-- 1 root root 193474601 Apr 1 22:57 web_returns_5_8.dat -rw-r--r-- 1 root root 193240644 Apr 1 22:57 web_returns_6_8.dat -rw-r--r-- 1 root root 193453842 Apr 1 22:57 web_returns_7_8.dat -rw-r--r-- 1 root root 193861959 Apr 1 22:57 web_returns_8_8.dat -rw-r--r-- 1 root root 5690073784 Apr 1 23:08 web_sales_1_4.dat -rw-r--r-- 1 root root 2837992809 Apr 1 22:57 web_sales_1_8.dat -rw-r--r-- 1 root root 5704569076 Apr 1 23:08 web_sales_2_4.dat -rw-r--r-- 1 root root 2852080975 Apr 1 22:57 web_sales_2_8.dat -rw-r--r-- 1 root root 5704984755 Apr 1 23:08 web_sales_3_4.dat -rw-r--r-- 1 root root 2852233860 Apr 1 22:57 web_sales_3_8.dat -rw-r--r-- 1 root root 5703904801 Apr 1 23:08 web_sales_4_4.dat -rw-r--r-- 1 root root 2852335216 Apr 1 22:57 web_sales_4_8.dat -rw-r--r-- 1 root root 2852056830 Apr 1 22:57 web_sales_5_8.dat -rw-r--r-- 1 root root 2852927925 Apr 1 22:57 web_sales_6_8.dat -rw-r--r-- 1 root root 2851698530 Apr 1 22:57 web_sales_7_8.dat -rw-r--r-- 1 root root 2852206271 Apr 1 22:57 web_sales_8_8.dat -rw-r--r-- 1 root root 9879 Apr 1 23:08 web_site_1_4.dat -rw-r--r-- 1 root root 9879 Apr 1 22:57 web_site_1_8.dat [root@aavin-dev tpcds-data]# ls -l | wc -l 121 [root@aavin-dev tpcds-data]# cd [root@aavin-dev ~]# du -h tpcds-data 215G tpcds-data

The data generated by TPDS data gen will be of .dat format,but we can import only the formats supported by clickhouse of which there is no option for .dat format.

You can find those details here

.dat to .csv

Since I got all the data in .dat format and have to change to .csv format. As a data wrangler i was just curios to cat it and see what actually i got.

[root@aavin-dev tpcds-data]# cat catalog_returns_1_8.dat | wc -l 2701485 [root@aavin-dev tpcds-data]# head catalog_returns_1_8.dat 2450926|45816|386|664601|797995|6189|84583|664601|797995|4703|84583|1|106|2|2|13|2|47|3888.31|233.29|4121.60|91.23|1348.90|3577.24|186.64|124.43|1673.42| 2450946|74710|8176|664601|797995|6189|84583|682809|665550|991|289832|1|17|2|5|27|2|49|2490.18|99.60|2589.78|52.54|1867.39|323.72|931.57|1234.89|2019.53| 2451065|71104|8348|525383|3755|2480|355652|452311|700704|5571|62485|4|7|13|2|25|4|12|64.32|4.50|68.82|22.97|78.60|1.28|55.47|7.57|106.07| 2450954|28638|29678|26801|90299|3797|67495|171681|1168758|7154|10197|2|9|12|3|23|5|20|829.40|49.76|879.16|60.00|308.00|622.05|176.24|31.11|417.76| 2451023|44538|21730|731351|528859|5567|73122|283027|737861|54|302647|1|78|12|5|15|6|6|403.08|36.27|439.35|4.58|199.44|354.71|27.57|20.80|240.29| 2450992|10904|12964|731351|528859|5567|73122|98008|1289384|1410|227436|1|54|7|3|23|6|7|23.17|1.85|25.02|19.85|7.07|6.25|9.64|7.28|28.77| 2451016|60162|12860|777674||||514994|||379335||2||||9|3|413.94|0.00||||318.73|35.22|59.99|185.36| 2450926|30343|3787|191731|1046123|5715|207107|488927|865466|4658|327697|1|65|14|3|1|11|17|1283.67|51.34|1335.01|71.97|58.31|449.28|183.56|650.83|181.62| 2451058|53881|6553|191731|1046123|5715|207107|678053|1827904|2936|421968|1|42|11|5|34|11|9|29.79|1.19|30.98|91.16|59.67|0.00|20.85|8.94|152.02|

Luckily i can cat in to the file and the data is “|”separated , so now it is not so hard to change the .dat extension to .csv. I accomplished with help of script as shown below.

time(for file in $1*.dat ; do mv "$file" "${file%.*}.csv" ; done)

[root@aavin-dev tpcds-data]# time(for file in $1*.dat ; do mv "$file" "${file%.*}.csv" ; done)

real 0m0.147s

user 0m0.105s

sys 0m0.042s

[root@aavin-dev tpcds-data]# ls call_center_1_8.csv customer_demographics_5_8.csv ship_mode_1_4.csv warehouse_1_4.csv catalog_page_1_8.csv customer_demographics_6_8.csv ship_mode_1_8.csv warehouse_1_8.csv catalog_returns_1_8.csv customer_demographics_7_8.csv store_1_4.csv web_page_1_4.csv catalog_returns_2_8.csv customer_demographics_8_8.csv store_1_8.csv web_page_1_8.csv catalog_returns_3_8.csv date_dim_1_4.csv store_returns_1_4.csv web_returns_1_4.csv catalog_returns_4_8.csv date_dim_1_8.csv store_returns_1_8.csv web_returns_1_8.csv catalog_returns_5_8.csv dbgen_version_1_4.csv store_returns_2_4.csv web_returns_2_4.csv catalog_returns_6_8.csv dbgen_version_1_8.csv store_returns_2_8.csv web_returns_2_8.csv catalog_returns_7_8.csv household_demographics_1_4.csv store_returns_3_4.csv web_returns_3_4.csv catalog_returns_8_8.csv household_demographics_1_8.csv store_returns_3_8.csv web_returns_3_8.csv catalog_sales_1_8.csv income_band_1_4.csv store_returns_4_4.csv web_returns_4_4.csv catalog_sales_2_8.csv income_band_1_8.csv store_returns_4_8.csv web_returns_4_8.csv catalog_sales_3_8.csv inventory_1_4.csv store_returns_5_8.csv web_returns_5_8.csv catalog_sales_4_8.csv inventory_1_8.csv store_returns_6_8.csv web_returns_6_8.csv catalog_sales_5_8.csv inventory_2_4.csv store_returns_7_8.csv web_returns_7_8.csv catalog_sales_6_8.csv inventory_2_8.csv store_returns_8_8.csv web_returns_8_8.csv catalog_sales_7_8.csv inventory_3_4.csv store_sales_1_4.csv web_sales_1_4.csv catalog_sales_8_8.csv inventory_3_8.csv store_sales_1_8.csv web_sales_1_8.csv customer_1_4.csv inventory_4_4.csv store_sales_2_4.csv web_sales_2_4.csv customer_1_8.csv inventory_4_8.csv store_sales_2_8.csv web_sales_2_8.csv customer_address_1_4.csv inventory_5_8.csv store_sales_3_4.csv web_sales_3_4.csv customer_address_1_8.csv inventory_6_8.csv store_sales_3_8.csv web_sales_3_8.csv customer_demographics_1_4.csv inventory_7_8.csv store_sales_4_4.csv web_sales_4_4.csv customer_demographics_1_8.csv inventory_8_8.csv store_sales_4_8.csv web_sales_4_8.csv customer_demographics_2_4.csv item_1_4.csv store_sales_5_8.csv web_sales_5_8.csv customer_demographics_2_8.csv item_1_8.csv store_sales_6_8.csv web_sales_6_8.csv customer_demographics_3_4.csv promotion_1_4.csv store_sales_7_8.csv web_sales_7_8.csv customer_demographics_3_8.csv promotion_1_8.csv store_sales_8_8.csv web_sales_8_8.csv customer_demographics_4_4.csv reason_1_4.csv time_dim_1_4.csv web_site_1_4.csv customer_demographics_4_8.csv reason_1_8.csv time_dim_1_8.csv web_site_1_8.csv

Now we got the final result of data in .csv files. Ready to import in to clickhouse

Clickhouse Import

Let me create schema for the tables to be imported

[root@aavin-dev ~]# clickhouse-client ClickHouse client version 20.1.3.7 (official build). Connecting to localhost:9000 as user default. Connected to ClickHouse server version 20.1.3 revision 54431. aavin-dev :) select version(); SELECT version() ┌─version()─┐ │ 20.1.3.7 │ └───────────┘

Tables and Schemas

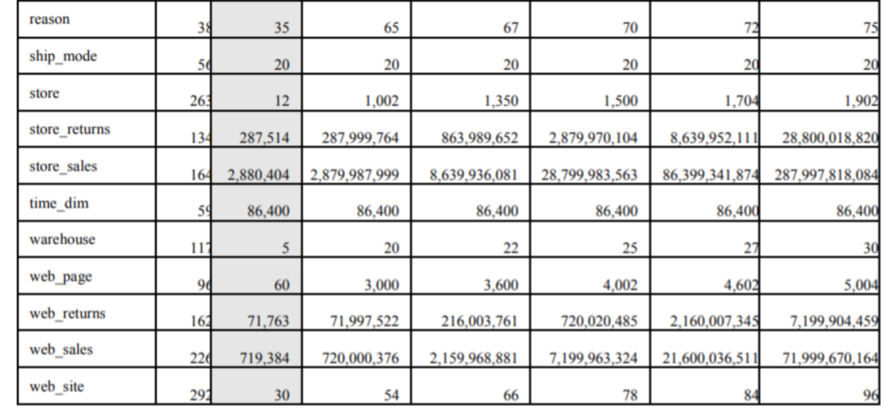

In this benchmark i am going to use tables mentioned in this document.

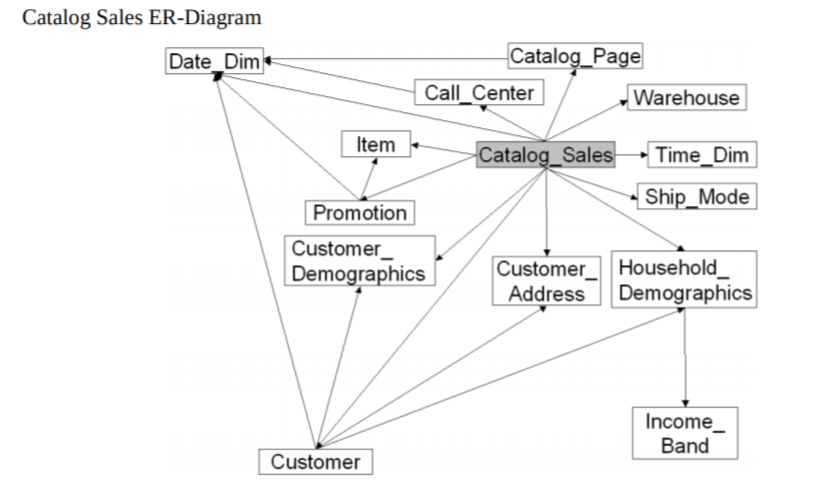

Few DDL queries with ER Diagram can be found below, For complete DDL queries and SQL queries you can refer my gist here

CREATE TABLE tpcdsch.catalog_sales ( `cs_sold_date_sk` Int8, `cs_sold_time_sk` Int8, `cs_ship_date_sk` Int8, `cs_bill_customer_sk` Int8, `cs_bill_cdemo_sk` Int8, `cs_bill_hdemo_sk` Int8, `cs_bill_addr_sk` Int8, `cs_ship_customer_sk` Int8, `cs_ship_cdemo_sk` Int8, `cs_ship_hdemo_sk` Int8, `cs_ship_addr_sk` Int8, `cs_call_center_sk` Int8, `cs_catalog_page_sk` Int8, `cs_ship_mode_sk` Int8, `cs_warehouse_sk` Int8, `cs_item_sk` Int8, `cs_promo_sk` Int8, `cs_order_number` Int8, `cs_quantity` Int8, `cs_wholesale_cost` Float, `cs_list_price` Float, `cs_sales_price` Float, `cs_ext_discount_amt` Float, `cs_ext_sales_price` Float, `cs_ext_wholesale_cost` Float, `cs_ext_list_price` Float, `cs_ext_tax` Float, `cs_coupon_amt` Float, `cs_ext_ship_cost` Float, `cs_net_paid` Float, `cs_net_paid_inc_tax` Float, `cs_net_paid_inc_ship` Float, `cs_net_paid_inc_ship_tax` Float, `cs_net_profit` Float) ENGINE = MergeTree() PARTITION BY (cs_sold_date_sk) ORDER BY (cs_sold_time_sk) SETTINGS index_granularity = 8192

CREATE TABLE tpcdsch.call_center ( `cc_call_center_sk` Int8, `cc_call_center_id` String, `cc_rec_start_date` Date, `cc_rec_end_date` Date, `cc_closed_date_sk` Int8, `cc_open_date_sk` Int8, `cc_name` String, `cc_class` String, `cc_employees` Int8, `cc_sq_ft` Int8, `cc_hours` String, `cc_manager` String, `cc_mkt_id` Int8, `cc_mkt_class` String, `cc_mkt_desc` String, `cc_market_manager` String, `cc_division` Int8, `cc_division_name` String, `cc_company` Int8, `cc_company_name` String, `cc_street_number` String, `cc_street_name` String, `cc_street_type` String, `cc_suite_number` String, `cc_city` String, `cc_county` String, `cc_state` String, `cc_zip` String, `cc_country` String, `cc_gmt_offset` Float, `cc_tax_percentage` Float ) ENGINE = MergeTree() PARTITION BY toYYYYMM(cc_rec_start_date) ORDER BY cc_call_center_sk SETTINGS index_granularity = 8192

CREATE TABLE tpcdsch.date_dim

(

d_date_sk Int8,

d_date_id String,

d_date Int8,

d_month_seq Int8,

d_week_seq Int8,

d_quarter_seg Int8,

d_year Int8,

d_dow Int8,

d_moy Int8,

d_dom Int8,

d_qoy Int8,

d_fy_year Int8,

d_fy_quarter_seq Int8,

d_fy_week_seq Int8,

d_day_name String,

d_quarter_name String,

d_holiday String,

d_weekend String,

d_following_holiday String,

d_first_dom Int8,

d_last_dom Int8,

d_same_day_1y Int8,

d_same_day_1q Int8,

d_current_day String,

d_current_week String,

d_current_month String,

d_current_quarter String,

d_current_year String

)

ENGINE = MergeTree()

PARTITION BY (d_date_sk)

ORDER BY (d_date_id)

SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.household_demographics

(

`hd_demo_sk` Int8,

`hd_income_band_sk` Int8,

`hd_buy_potential` String,

`hd_dep_count` Int8,

`hd_vehicle_count` Int8

)

ENGINE = MergeTree()

PARTITION BY tuple()

ORDER BY tuple()

SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.item

(

`i_item_sk` Int8,

`i_item_id` String,

`i_rec_start_date` Date,

`i_rec_end_date` Date,

`i_item_desc` String,

`i_current_price` Float,

`i_wholesale_cost` Float,

`i_brand_id` Int8,

`i_brand` String,

`i_class_id` Int8,

`i_class` String,

`i_category_id` Int8,

`i_category` String,

`i_manufact_id` Int8,

`i_manufact` String,

`i_size` String,

`i_formulation` String,

`i_color` String,

`i_units` String,

`i_container` String,

`i_manager_id` Int8,

`i_product_name` String

)

ENGINE = MergeTree()

PARTITION BY (i_item_sk)

ORDER BY (i_item_id)

SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.store (

s_store_sk Int8 ,

s_store_id String,

s_rec_start_date date,

s_rec_end_date date,

s_closed_date_sk Int8,

s_store_name String,

s_number_employees Int8,

s_floor_space Int8,

s_hours String,

s_manager String,

s_market_id Int8,

s_geography_class String,

s_market_desc String,

s_market_manager String,

s_division_id Int8,

s_division_name String,

s_company_id Int8,

s_company_name String,

s_street_number String,

s_street_name String,

s_street_type String,

s_suite_number String,

s_city String,

s_county String,

s_state String,

s_zip String,

s_country String,

s_gmt_offset Float,

s_tax_percentage Float

)

ENGINE = MergeTree()

PARTITION BY tuple()

ORDER BY tuple()

SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.customer

(

`c_customer_sk` Int8,

`c_customer_id` String,

`c_current_cdemo_sk` Int8,

`c_current_hdemo_sk` Int8,

`c_current_addr_sk` Int8,

`c_first_shipto_date_sk` Int8,

`c_first_sales_date_sk` Int8,

`c_salutation` String,

`c_first_name` String,

`c_last_name` String,

`c_preferred_cust_flag` String,

`c_birth_day` Int8,

`c_birth_month` Int8,

`c_birth_year` Int8,

`c_birth_country` String,

`c_login` String,

`c_email_address` String,

`c_last_review_date` Int8

)

ENGINE = MergeTree()

PARTITION BY c_birth_country

ORDER BY c_first_sales_date_sk

SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.store_sales ( ss_sold_date_sk Int8, ss_sold_time_sk Int8, ss_item_sk Int8 , ss_customer_sk Int8, ss_cdemo_sk Int8, ss_hdemo_sk Int8, ss_addr_sk Int8, ss_store_sk Int8, ss_promo_sk Int8, ss_ticket_number Int8 , ss_quantity Int8, ss_wholesale_cost Float, ss_list_price Float, ss_sales_price Float, ss_ext_discount_amt Float, ss_ext_sales_price Float, ss_ext_wholesale_cost Float, ss_ext_list_price Float, ss_ext_tax Float, ss_coupon_amt Float, ss_net_paid Float, ss_net_paid_inc_tax Float, ss_net_profit Float ) ENGINE = MergeTree() PARTITION BY (ss_store_sk) ORDER BY (ss_sold_date_sk) SETTINGS index_granularity = 8192;

CREATE TABLE tpcdsch.web_sales ( ws_sold_date_sk Int8, ws_sold_time_sk Int8, ws_ship_date_sk Int8, ws_item_sk Int8 , ws_bill_customer_sk Int8, ws_bill_cdemo_sk Int8, ws_bill_hdemo_sk Int8, ws_bill_addr_sk Int8, ws_ship_customer_sk Int8, ws_ship_cdemo_sk Int8, ws_ship_hdemo_sk Int8, ws_ship_addr_sk Int8, ws_web_page_sk Int8, ws_web_site_sk Int8, ws_ship_mode_sk Int8, ws_warehouse_sk Int8, ws_promo_sk Int8, ws_order_number Int8 , ws_quantity Int8, ws_wholesale_cost Float, ws_list_price Float, ws_sales_price Float, ws_ext_discount_amt Float, ws_ext_sales_price Float, ws_ext_wholesale_cost Float, ws_ext_list_price Float, ws_ext_tax Float, ws_coupon_amt Float, ws_ext_ship_cost Float, ws_net_paid Float, ws_net_paid_inc_tax Float, ws_net_paid_inc_ship Float, ws_net_paid_inc_ship_tax Float, ws_net_profit Float ) ENGINE = MergeTree() PARTITION BY (ws_warehouse_sk) ORDER BY (ws_sold_date_sk) SETTINGS index_granularity = 8192 ;

Insert into Tables

For catalog_sales table

time (for filename in /root/tpcds-data/catalog_sales_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.catalog_sales FORMAT CSV" < $filename; done)

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/catalog_sales_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.catalog_sales FORMAT CSV" < $filename; done) real 9m0.689s user 6m4.536s sys 0m21.296s

SELECT count(*) FROM catalog_sales ┌───count()─┐ │ 215993568 │ └───────────┘ 1 rows in set. Elapsed: 0.003 sec.

Almost 215.993568 Million rows

SELECT

countdistinct(cs_sold_date_sk),

countdistinct(cs_sold_time_sk)

FROM tpcdsch.catalog_sales

┌─uniqExact(cs_sold_date_sk)─┬─uniqExact(cs_sold_time_sk)─┐

│ 256 │ 256 │

└────────────────────────────┴────────────────────────────┘

1 rows in set. Elapsed: 7.149 sec. Processed 215.99 million rows, 431.99 MB (30.21 million rows/s., 60.43 MB/s.)

For call_center

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/call_center_1_8.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.call_center FORMAT CSV" < $filename; done) real 0m0.331s user 0m0.018s sys 0m0.009s

SELECT count(*) FROM call_center ┌─count()─┐ │ 6 │ └─────────┘ 1 rows in set. Elapsed: 0.002 sec.

For date_dim

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/date_dim_1*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.date_dim FORMAT CSV" --max_partitions_per_insert_block=0 < $filename; done) real 0m1.009s user 0m0.223s sys 0m0.040s

SELECT count(*) FROM date_dim ┌─count()─┐ │ 146098 │ └─────────┘

For household_demographics

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/household_demographics_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.household_demographics FORMAT CSV" < $filename; done) real 0m0.268s user 0m0.037s sys 0m0.025s

aavin-dev :) select count(*) from household_demographics; SELECT count(*) FROM household_demographics ┌─count()─┐ │ 14400 │ └─────────┘ 1 rows in set. Elapsed: 0.002 sec.

For item

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/item_1*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.item FORMAT CSV" --max_partitions_per_insert_block=0 < $filename; done) real 0m0.816s user 0m0.157s sys 0m0.047s

SELECT count(*) FROM item ┌─count()─┐ │ 64000 │ └─────────┘ 1 rows in set. Elapsed: 0.002 sec.

SELECT

countdistinct(i_item_sk),

countdistinct(i_item_id)

FROM tpcdsch.item

┌─uniqExact(i_item_sk)─┬─uniqExact(i_item_id)─┐

│ 256 │ 16001 │

└──────────────────────┴──────────────────────┘

1 rows in set. Elapsed: 0.023 sec. Processed 64.00 thousand rows, 1.66 MB (2.78 million rows/s., 72.33 MB/s.)

For store

time (for filename in /root/tpcds-data/store_1*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.store FORMAT CSV" --max_partitions_per_insert_block=50< $filename; done)

aavin-dev :) select count(*) from store; SELECT count(*) FROM store ┌─count()─┐ │ 224 │ └─────────┘ 1 rows in set. Elapsed: 0.002 sec.

For store_sales

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/store_sales_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.store_sales FORMAT CSV" < $filename; done)

aavin-dev :) select count(*) from store_sales; SELECT count(*) FROM store_sales ┌───count()─┐ │ 864006526 │ └───────────┘ 1 rows in set. Elapsed: 0.003 sec.

about 864.006526 Million rows

SELECT

countdistinct(ss_store_sk),

countdistinct(ss_sold_date_sk)

FROM tpcdsch.store_sales

┌─uniqExact(ss_store_sk)─┬─uniqExact(ss_sold_date_sk)─┐

│ 58 │ 256 │

└────────────────────────┴────────────────────────────┘

1 rows in set. Elapsed: 4.736 sec. Processed 864.01 million rows, 1.73 GB (182.42 million rows/s., 364.85 MB/s.)

For web_sales

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/web_sales_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.web_sales FORMAT CSV" < $filename; done) real 12m31.076s user 6m8.092s sys 0m20.862s

SELECT count(*) FROM tpcdsch.web_sales ┌───count()─┐ │ 216012898 │ └───────────┘ 1 rows in set. Elapsed: 0.002 sec.

about 216.012898 Million rows

For table customer

[root@aavin-dev tpcds-data]# time (for filename in /root/tpcds-data/customer_*.csv; do clickhouse-client --format_csv_delimiter="|" --query="INSERT INTO tpcdsch.customer FORMAT CSV" --max_partitions_per_insert_block=0 --input_format_allow_errors_num=10000000 < $filename; done) real 0m22.043s user 0m24.953s sys 0m8.793s

I used a flag “input_format_allow_errors_num=10000000” since some rows has null values.

SELECT count(*) FROM tpcdsch.customer ┌─count()─┐ │ 3400000 │ └─────────┘ 1 rows in set. Elapsed: 0.002 sec.

Final Results

Single table queries used can be found here

Multi table queries used can be found here

| sql Single Table Statement | Time Taken in seconds |

|---|---|

| sql_01 | 0.921 |

| sql_02 | 1.545 |

| sql_03 | 2.277 |

| sql_04 | 1.431 |

| sql_05 | 1.329 |

| sql_06 | 37.023 |

| sql_07 | 36.594 |

| sql_08 | 57.651 |

| sql_09 | 119.007 |

| Total Time | 257.778 |

| sql Multiple Table Queries | Time Taken in seconds |

| sql_01 | 63.72 |

| sql_02 | 93.93 |

| sql_03 | 67.23 |

| sql_04 | 267.48 |

| sql_05 | 101.49 |

| sql_06 | 106.23 |

| sql_07 | 146.82 |

| sql_08 | 63.93 |

| sql_09 | 8.85 |

| sql_10 | 132.36 |

| sql_11 | 103.95 |

| sql_12 | 146.49 |

| sql_13 | 301.23 |

| sql_14 | 30.51 |

| sql_15 | 140.46 |

| Total Time | 1774.68 |

Conclusion

This is my first attempt to benchmark of any OLAP of this kind,even-though i worked in Redshift with multi node cluster bigger than this but the performance offered by single node of this kind was very impressive on a CPU based system.

That being said, running single node ClickHouse server is comparatively easy with respect to cluster using zookeeper. ClickHouse is the fastest calculation in all open source MPP computing frameworks. It is very exciting when doing multi-column tables and querying a large number of rows. However, when doing multi-table joins, Its performance is not as good as a single wide table query.The performance test results show that ClickHouse shows a great performance advantage in single-table query, but the performance is poor in multi-table queries especially in multi table join.As i tried to make a multi table in to a single wide column table (Star –> Flat table) the performance was better with respect to speed and cost,but initially it took substantial amount of time and annoyed with errors like”DB::Exception: Memory limit (for query) exceeded” finally i succeeded by tuning some memory settings for single query found in clickhouse-server settings(getting optimum level of memory setting was tedious since if you allocate more memory for a single query then clickhouse-server will be killed).

Refer “tpcdsch.catalog_sales_flat” table.

With some tuning and leveraging materialised views one can further improve the performance.

Selection of

ENGINE = MergeTree()

PARTITION BY (partition key)

ORDER BY (order by key)

Plays an important role in optimising the query performance, mainly it depends upon business use case and query access patterns,in my case i selected based on queries i used and best practices described in documentation.

One important feature i am fascinated about ClickHouse is clickhouse-local as the doumentation states that the ‘clickhouse-local’ program enables you to perform fast processing on local files, without having to deploy and configure the ClickHouse server.

This is one of the powerful feature where one can use this instead of Spark for data cleaning and preparation in which i relayed upon most.

Thanks for being with me so for, hope you like it, if you felt anything i missed let me know in comments.

References:

Clickhouse — https://clickhouse.tech/docs/en/

TPCDS — http://www.tpc.org/tpc_documents_current_versions/pdf/tpc-ds_v2.11.0.pdf

Creating tpcdsch.catalog_sales_flat FLAT Table

CREATE TABLE tpcdsch.catalog_sales_flat

ENGINE = MergeTree

PARTITION BY cs_sold_date_sk

ORDER BY cs_sold_time_sk AS

SELECT

cs.cs_sold_date_sk AS cs_sold_date_sk,

cs.cs_sold_time_sk AS cs_sold_time_sk,

cs.cs_ship_date_sk AS cs_ship_date_sk,

cs.cs_bill_customer_sk AS cs_bill_customer_sk,

cs.cs_bill_cdemo_sk AS cs_bill_cdemo_sk,

cs.cs_bill_hdemo_sk AS cs_bill_hdemo_sk,

cs.cs_bill_addr_sk AS cs_bill_addr_sk,

cs.cs_ship_customer_sk AS cs_ship_customer_sk,

cs.cs_ship_cdemo_sk AS cs_ship_cdemo_sk,

cs.cs_ship_hdemo_sk AS cs_ship_hdemo_sk,

cs.cs_ship_addr_sk AS cs_ship_addr_sk,

cs.cs_call_center_sk AS cs_call_center_sk,

cs.cs_catalog_page_sk AS cs_catalog_page_sk,

cs.cs_ship_mode_sk AS cs_ship_mode_sk,

cs.cs_warehouse_sk AS cs_warehouse_sk,

cs.cs_item_sk AS cs_item_sk,

cs.cs_promo_sk AS cs_promo_sk,

cs.cs_order_number AS cs_order_number,

cs.cs_quantity AS cs_quantity,

cs.cs_wholesale_cost AS cs_wholesale_cost,

cs.cs_list_price AS cs_list_price,

cs.cs_sales_price AS cs_sales_price,

cs.cs_ext_discount_amt AS cs_ext_discount_amt,

cs.cs_ext_sales_price AS cs_ext_sales_price,

cs.cs_ext_wholesale_cost AS cs_ext_wholesale_cost,

cs.cs_ext_list_price AS cs_ext_list_price,

cs.cs_ext_tax AS cs_ext_tax,

cs.cs_coupon_amt AS cs_coupon_amt,

cs.cs_ext_ship_cost AS cs_ext_ship_cost,

cs.cs_net_paid AS cs_net_paid,

cs.cs_net_paid_inc_tax AS cs_net_paid_inc_tax,

cs.cs_net_paid_inc_ship AS cs_net_paid_inc_ship,

cs.cs_net_paid_inc_ship_tax AS cs_net_paid_inc_ship_tax,

cs.cs_net_profit AS cs_net_profit,

da.d_date_sk AS d_date_sk,

da.d_date_id AS d_date_id,

da.d_date AS d_date,

da.d_month_seq AS d_month_seq,

da.d_week_seq AS d_week_seq,

da.d_quarter_seg AS d_quarter_seg,

da.d_year AS d_year,

da.d_dow AS d_dow,

da.d_moy AS d_moy,

da.d_dom AS d_dom,

da.d_qoy AS d_qoy,

da.d_fy_year AS d_fy_year,

da.d_fy_quarter_seq AS d_fy_quarter_seq,

da.d_fy_week_seq AS d_fy_week_seq,

da.d_day_name AS d_day_name,

da.d_quarter_name AS d_quarter_name,

da.d_holiday AS d_holiday,

da.d_weekend AS d_weekend,

da.d_following_holiday AS d_following_holiday,

da.d_first_dom AS d_first_dom,

da.d_last_dom AS d_last_dom,

da.d_same_day_1y AS d_same_day_1y,

da.d_same_day_1q AS d_same_day_1q,

da.d_current_day AS d_current_day,

da.d_current_week AS d_current_week,

da.d_current_month AS d_current_month,

da.d_current_quarter AS d_current_quarter,

da.d_current_year AS d_current_year,

hd.hd_demo_sk AS hd_demo_sk,

hd.hd_income_band_sk AS hd_income_band_sk,

hd.hd_buy_potential AS hd_buy_potential,

hd.hd_dep_count AS hd_dep_count,

hd.hd_vehicle_count AS hd_vehicle_count,

it.i_item_sk AS i_item_sk,

it.i_item_id AS i_item_id,

it.i_rec_start_date AS i_rec_start_date,

it.i_rec_end_date AS i_rec_end_date,

it.i_item_desc AS i_item_desc,

it.i_current_price AS i_current_price,

it.i_wholesale_cost AS i_wholesale_cost,

it.i_brand_id AS i_brand_id,

it.i_brand AS i_brand,

it.i_class_id AS i_class_id,

it.i_class AS i_class,

it.i_category_id AS i_category_id,

it.i_category AS i_category,

it.i_manufact_id AS i_manufact_id,

it.i_manufact AS i_manufact,

it.i_size AS i_size,

it.i_formulation AS i_formulation,

it.i_color AS i_color,

it.i_units AS i_units,

it.i_container AS i_container,

it.i_manager_id AS i_manager_id,

it.i_product_name AS i_product_name,

cu.c_customer_sk AS c_customer_sk,

cu.c_customer_id AS c_customer_id,

cu.c_current_cdemo_sk AS c_current_cdemo_sk,

cu.c_current_hdemo_sk AS c_current_hdemo_sk,

cu.c_current_addr_sk AS c_current_addr_sk,

cu.c_first_shipto_date_sk AS c_first_shipto_date_sk,

cu.c_first_sales_date_sk AS c_first_sales_date_sk,

cu.c_salutation AS c_salutation,

cu.c_first_name AS c_first_name,

cu.c_last_name AS c_last_name,

cu.c_preferred_cust_flag AS c_preferred_cust_flag,

cu.c_birth_day AS c_birth_day,

cu.c_birth_month AS c_birth_month,

cu.c_birth_year AS c_birth_year,

cu.c_birth_country AS c_birth_country,

cu.c_login AS c_login,

cu.c_email_address AS c_email_address,

cu.c_last_review_date AS c_last_review_date,

cc.cc_call_center_sk AS cc_call_center_sk,

cc.cc_call_center_id AS cc_call_center_id,

cc.cc_rec_start_date AS cc_rec_start_date,

cc.cc_rec_end_date AS cc_rec_end_date,

cc.cc_closed_date_sk AS cc_closed_date_sk,

cc.cc_open_date_sk AS cc_open_date_sk,

cc.cc_name AS cc_name,

cc.cc_class AS cc_class,

cc.cc_employees AS cc_employees,

cc.cc_sq_ft AS cc_sq_ft,

cc.cc_hours AS cc_hours,

cc.cc_manager AS cc_manager,

cc.cc_mkt_id AS cc_mkt_id,

cc.cc_mkt_class AS cc_mkt_class,

cc.cc_mkt_desc AS cc_mkt_desc,

cc.cc_market_manager AS cc_market_manager,

cc.cc_division AS cc_division,

cc.cc_division_name AS cc_division_name,

cc.cc_company AS cc_company,

cc.cc_company_name AS cc_company_name,

cc.cc_street_number AS cc_street_number,

cc.cc_street_name AS cc_street_name,

cc.cc_street_type AS cc_street_type,

cc.cc_suite_number AS cc_suite_number,

cc.cc_city AS cc_city,

cc.cc_county AS cc_county,

cc.cc_state AS cc_state,

cc.cc_zip AS cc_zip,

cc.cc_country AS cc_country,

cc.cc_gmt_offset AS cc_gmt_offset,

cc.cc_tax_percentage AS cc_tax_percentage

FROM tpcdsch.catalog_sales AS cs

INNER JOIN tpcdsch.date_dim AS da ON cs.cs_sold_date_sk = da.d_date

INNER JOIN tpcdsch.household_demographics AS hd ON cs.cs_bill_hdemo_sk = hd.hd_demo_sk

INNER JOIN tpcdsch.item AS it ON cs.cs_item_sk = it.i_item_sk

INNER JOIN tpcdsch.customer AS cu ON cs.cs_ship_customer_sk = cu.c_customer_sk

INNER JOIN tpcdsch.call_center AS cc ON cs.cs_call_center_sk = cc.cc_call_center_sk

0

1 Comment